Have you ever wondered how much a false positive costs a company on a production line? What about an unplanned shutdown? Everything starts with that question… and if you keep reading, you’ll discover why.

For years, automation meant repetition. Machines were precise, yes, but any change in lighting or in the angle of a part could cause the process to fail. Every shift in environmental conditions was a potential source of error, and what seemed like an improvement in efficiency turned into a limitation.

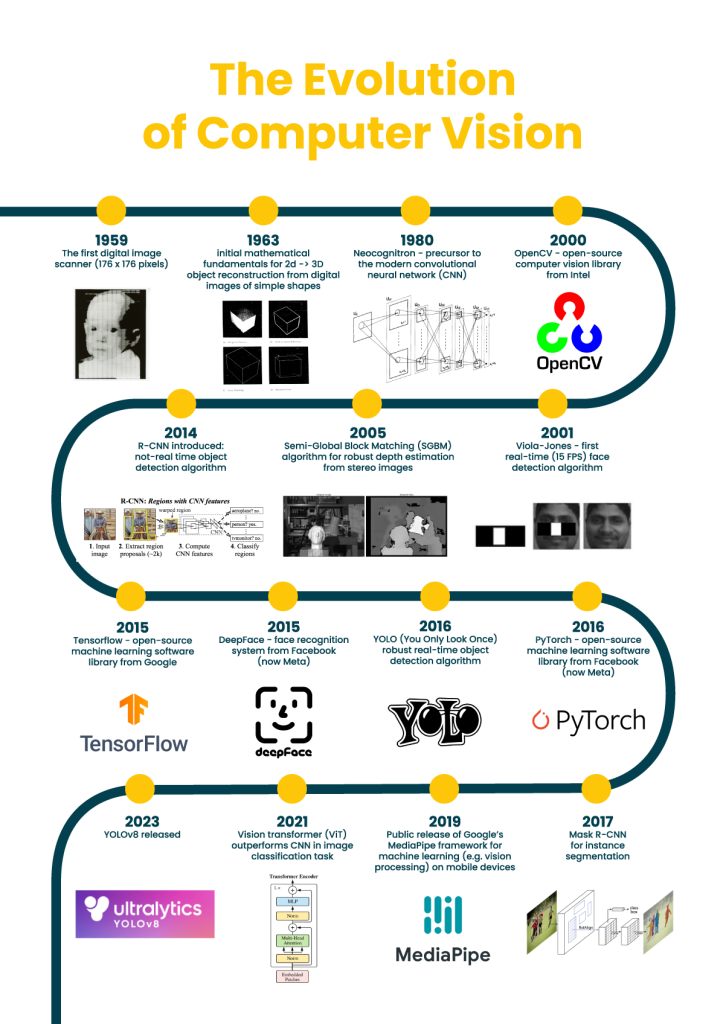

But what if cameras could overcome that barrier? What if, instead of simply capturing pixels, they could “understand” what they see, adapting to changes in the environment much as the human eye does?

This is the foundation of the Smart Factory [1], and Computer Vision and Deep Learning are what make it possible. The advance goes beyond improving accuracy: it reduces uncertainty. Systems stop merely measuring and start interpreting. They can distinguish a real defect from a simple stain or guide a robot in dynamic environments, finally adapting to the reality of factories instead of the other way around.

In its early days, traditional computer vision worked under strict rules: look for an edge of X pixels or detect this exact color. Although this approach is still useful in controlled environments, it has a weakness: fragility. A reflection, a change in lighting, or camera vibrations are enough to confuse the system [2].
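To make that fragility concrete, here is a minimal sketch of a fixed-threshold edge rule. The scanline values, the 40 % dimming factor, and the threshold are hypothetical numbers chosen for illustration, not measurements from a real line.

```python
def find_edge(scanline, threshold):
    """Return the index of the first pixel-to-pixel jump >= threshold, or None."""
    for i in range(1, len(scanline)):
        if abs(scanline[i] - scanline[i - 1]) >= threshold:
            return i
    return None

# Hypothetical grayscale scanline (0-255): dark background, then a bright part.
normal = [20, 22, 21, 200, 202, 201]
# The same scene under dimmer light: every value scaled to 40 % brightness.
dim = [round(v * 0.4) for v in normal]

EDGE_THRESHOLD = 100  # fixed rule, tuned for the normal lighting only

edge_normal = find_edge(normal, EDGE_THRESHOLD)  # the edge is found
edge_dim = find_edge(dim, EDGE_THRESHOLD)        # the same edge is missed
print(edge_normal, edge_dim)
```

The part has not moved, but the contrast across the edge shrinks with the light, so the hard-coded rule no longer fires.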

This is where Deep Learning makes the difference [2]. Thanks to neural networks, the system does not follow fixed rules but learns patterns directly from data. After training on a large number of example images, the model develops its own logic to identify products or defects. As a result, variable lighting or a poorly positioned part becomes far less of a problem: the system learns what it is looking for across the range of conditions represented in its training data.

Image: How defect detection with computer vision works

As Deep Learning has become established in industry, new challenges have also emerged, such as lack of data, the need for faster responses, and the complexity of industrial environments. These challenges have driven the development of new trends in AI and computer vision. Some of the most important ones are:

Generative AI and synthetic data.

As mentioned earlier, for a Deep Learning model to learn how to identify defects such as scratches, deformations, stains, assembly errors, or surface marks, it needs to see a large number of examples. However, collecting images that cover all possible variations, such as lighting changes, angles, or textures, is not always easy.

This is where Generative AI comes into play. Using technologies such as Generative Adversarial Networks (GANs) or diffusion models, it is possible to create synthetic data. These are photorealistic images that simulate all these variations and help build robust models even with limited real data. This reduces implementation time and speeds up system development [3]. In sectors such as automotive, electronics, or packaging, this has become an essential tool for deploying complex vision systems faster.
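A GAN or diffusion model is too large to show here, so the sketch below swaps in a much simpler technique, classical data augmentation, only to illustrate the underlying idea of multiplying one real example into many lighting and orientation variants. The tiny 3x3 "scratch" image, the brightness range, and the function names are all assumptions made for this example.

```python
import random

def vary_brightness(image, factor):
    """Scale every pixel by factor and clamp to the 0-255 range."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in image]

def flip_horizontal(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]

def make_variations(image, n, seed=0):
    """Produce n variants with random brightness and a random horizontal flip."""
    rng = random.Random(seed)  # seeded so the output is reproducible
    variants = []
    for _ in range(n):
        variant = vary_brightness(image, rng.uniform(0.6, 1.4))
        if rng.random() < 0.5:
            variant = flip_horizontal(variant)
        variants.append(variant)
    return variants

# Hypothetical 3x3 grayscale patch with a bright diagonal "scratch".
scratch = [[10, 10, 200],
           [10, 200, 10],
           [200, 10, 10]]

variations = make_variations(scratch, n=5)
print(len(variations), "synthetic variants from one real image")
```

Generative models go much further, since they can produce defects and textures that never appeared in the real data, but the goal is the same: cover more variation than the collected images alone.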

Language and Vision Models.

Another key trend is the integration of models that combine vision and language. Instead of only classifying or detecting objects, these models can reason about what they see, explain the cause of a problem, assess its severity, and even suggest a recommended action. This allows them to provide real-time diagnostics based on a simple question from an operator. They are also very useful for generating automatic reports, assisting in maintenance tasks, and acting as a natural interface between people and automated systems [4].

Edge AI.

Many industrial processes require decisions to be made within milliseconds: rejecting a defective part, stopping a machine, adjusting a robot’s path, or synchronizing a production cell. This need has driven the adoption of Edge AI. Thanks to smart cameras and high-performance embedded devices, algorithms run directly on the device itself [5]. This enables faster response times, greater data privacy, and operation without an internet connection, making it a standard in environments where every millisecond matters.
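A quick back-of-the-envelope calculation shows why those milliseconds matter. All figures below (belt speed, camera-to-rejector distance, inference time, network round trip) are hypothetical values picked for illustration.

```python
def time_budget_ms(distance_m, belt_speed_m_s):
    """Time a part takes to travel from the camera to the rejector, in ms."""
    return distance_m / belt_speed_m_s * 1000.0

def meets_deadline(latency_ms, budget_ms):
    """True if the decision arrives before the part reaches the rejector."""
    return latency_ms <= budget_ms

# Hypothetical line: rejector 10 cm after the camera, belt moving at 2 m/s.
budget = time_budget_ms(distance_m=0.10, belt_speed_m_s=2.0)  # about 50 ms

INFERENCE_MS = 15.0           # assumed on-device inference time
NETWORK_ROUND_TRIP_MS = 80.0  # assumed round trip to a remote server

edge_ok = meets_deadline(INFERENCE_MS, budget)
remote_ok = meets_deadline(INFERENCE_MS + NETWORK_ROUND_TRIP_MS, budget)
print(f"budget={budget:.0f} ms, edge ok={edge_ok}, remote ok={remote_ok}")
```

Under these assumptions the on-device decision fits the budget, while the same model behind a network hop would act only after the part has already passed the rejector.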

3D Vision.

After many advances in 2D vision, understanding only flat images is no longer enough. The next step is for machines to understand the real geometry of objects, including shapes, volumes, and exact positions in space. This is possible by combining AI with 3D point clouds generated by technologies such as LiDAR, Time of Flight (ToF), or stereo vision. With this information, systems can distinguish between surface defects and volumetric deformations, perform highly accurate measurements, and understand the spatial orientation of complex parts.
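One simple way to see how depth data exposes a volumetric deformation is to fit a reference plane to the points and flag those that sit too far from it. The sketch below does this with plain least squares; the 3x3 point grid, the 0.3-unit dent, and the tolerance are hypothetical, and a production system would typically use a robust fit and a dedicated point-cloud library.

```python
def det3(m):
    """Determinant of a 3x3 matrix given as a list of rows."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def fit_plane(points):
    """Least-squares fit of z = a*x + b*y + c via the normal equations."""
    sx = sum(x for x, _, _ in points)
    sy = sum(y for _, y, _ in points)
    sz = sum(z for _, _, z in points)
    sxx = sum(x * x for x, _, _ in points)
    syy = sum(y * y for _, y, _ in points)
    sxy = sum(x * y for x, y, _ in points)
    sxz = sum(x * z for x, _, z in points)
    syz = sum(y * z for _, y, z in points)
    matrix = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, len(points)]]
    rhs = [sxz, syz, sz]
    d = det3(matrix)
    coeffs = []
    for col in range(3):  # Cramer's rule: replace one column with the right-hand side
        m = [row[:] for row in matrix]
        for r in range(3):
            m[r][col] = rhs[r]
        coeffs.append(det3(m) / d)
    return tuple(coeffs)

def flag_deviations(points, tolerance):
    """Return the points whose height differs from the fitted plane by more than tolerance."""
    a, b, c = fit_plane(points)
    return [(x, y, z) for x, y, z in points if abs(z - (a * x + b * y + c)) > tolerance]

# Hypothetical flat surface at height 1.0 with a dent in the middle.
points = [(x, y, 0.7 if (x, y) == (1, 1) else 1.0) for x in range(3) for y in range(3)]
flagged = flag_deviations(points, tolerance=0.1)
print(flagged)
```

A stain would change the color of a pixel but not its height, so it would never show up in this check, which is the distinction between surface and volumetric defects described above.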

One of the most representative applications of this trend is bin picking [6]. In this task, a robot learns to “see” a container filled with randomly placed parts, identify each one, calculate its orientation, and pick it up in an optimal way.

Multispectral and hyperspectral vision.

New challenges have also emerged related to analyzing the internal properties of materials, such as chemical composition, moisture, wear, or temperature. These properties cannot be observed in the visible spectrum alone. As a result, cameras that capture infrared, ultraviolet, thermal, or even hundreds of spectral bands simultaneously have gained importance [7].

This technology is mainly used in maintenance, for example to detect hot spots in motors or electrical panels. It is also used to differentiate materials that look identical to the human eye, classify food based on ripeness, or identify impurities and internal defects [7].
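As a rough illustration of how two materials that look identical can be told apart, the sketch below compares reflectance in a visible band against a short-wave infrared (SWIR) band using a normalized difference index. The reflectance values, the material names, and the 0.2 threshold are invented for this example and do not come from a real sensor.

```python
def normalized_difference(band_a, band_b):
    """Normalized difference of two reflectance values, in the range [-1, 1]."""
    return (band_a - band_b) / (band_a + band_b)

# Hypothetical reflectances: identical in the visible band, different in SWIR.
samples = {
    "material_a": {"visible": 0.50, "swir": 0.48},
    "material_b": {"visible": 0.50, "swir": 0.20},
}

INDEX_THRESHOLD = 0.2  # assumed decision boundary for this toy example

indices = {
    name: normalized_difference(bands["visible"], bands["swir"])
    for name, bands in samples.items()
}
labels = {
    name: "type 2" if index > INDEX_THRESHOLD else "type 1"
    for name, index in indices.items()
}
print(indices, labels)
```

A camera limited to the visible band sees the same value for both samples, while the extra band separates them cleanly. Hyperspectral systems apply the same principle across hundreds of bands.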

Vision Transformers (ViT).

Detecting very small defects on complex surfaces, such as polished metals, electronic boards, or composite materials, remains a challenge for traditional machine vision. Vision Transformers (ViT) represent an evolution of Deep Learning that addresses this problem. Thanks to their ability to capture global relationships within an image, they offer higher accuracy and reduce false positives. This is critical in industries with strict quality control requirements [8].
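The "global relationships" come from two steps: the image is cut into patches, and self-attention lets every patch weigh every other patch. The sketch below shows only those two steps on a toy 4x4 image. It is a simplification, not a full ViT: queries and keys are the raw patch values, with no learned projections, positional embeddings, or multiple heads.

```python
import math

def to_patches(image, size):
    """Split an image (list of rows) into flattened size x size patches."""
    patches = []
    for r in range(0, len(image), size):
        for c in range(0, len(image[0]), size):
            patches.append([image[r + i][c + j] for i in range(size) for j in range(size)])
    return patches

def attention_weights(tokens):
    """Scaled dot-product attention weights, using the tokens as both queries and keys."""
    d = len(tokens[0])
    weights = []
    for query in tokens:
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in tokens]
        top = max(scores)  # subtract the maximum for a numerically stable softmax
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights

# Toy 4x4 image: two bright quadrants and two dark ones.
image = [[0.0, 0.0, 1.0, 1.0],
         [0.0, 0.0, 1.0, 1.0],
         [1.0, 1.0, 0.0, 0.0],
         [1.0, 1.0, 0.0, 0.0]]

patches = to_patches(image, size=2)   # 4 patches of 4 values each
weights = attention_weights(patches)  # 4x4 matrix, each row sums to 1
for row in weights:
    print([round(w, 2) for w in row])
```

Each row shows how strongly one patch attends to all the others, including patches on the opposite side of the image. A convolution only reaches that far after stacking many layers.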

Digital Twins and vision.

In many cases, it is difficult to anticipate failures in machines, production lines, or entire systems, leading to unexpected downtime and high costs. Digital Twins address this problem. They are interactive virtual replicas of equipment or processes that are fed with real data. In this context, vision systems act as their “eyes.” Cameras monitor vibrations, wear, speed, part geometry, and even micro-defects, providing the data needed for the Digital Twin to simulate the future state of the system [9].

For example, if the system detects that a conveyor belt is vibrating 5 percent more than normal, the AI calculates the probability of failure and estimates when it may occur. When the risk becomes critical, a preventive maintenance order is generated, helping to reduce unplanned downtime.
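The flow in this example can be sketched in a few lines. The logistic curve, its parameters, the baseline vibration level, and the 0.8 critical threshold are all hypothetical. A real Digital Twin would estimate risk with a model calibrated on the equipment's own history.

```python
import math

def relative_increase(current, baseline):
    """Fractional increase of the current reading over the baseline."""
    return (current - baseline) / baseline

def failure_probability(increase, midpoint=0.10, steepness=40.0):
    """Hypothetical logistic mapping from vibration increase to failure risk."""
    return 1.0 / (1.0 + math.exp(-steepness * (increase - midpoint)))

def needs_maintenance(probability, critical=0.8):
    """True when the estimated risk reaches the critical level."""
    return probability >= critical

BASELINE_MM_S = 2.0  # assumed normal vibration level of the conveyor

orders = []
for reading in (2.1, 2.3):  # 5 % and 15 % above the baseline
    increase = relative_increase(reading, BASELINE_MM_S)
    risk = failure_probability(increase)
    if needs_maintenance(risk):
        orders.append(f"preventive maintenance: vibration +{increase:.0%}, risk {risk:.2f}")
    print(f"reading={reading} mm/s, increase={increase:.0%}, risk={risk:.2f}")
print(orders)
```

With these assumed parameters, a 5 percent increase is logged but does not trigger anything, while a 15 percent increase crosses the critical level and generates the maintenance order.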

AI for human–robot collaboration and safety.

Another growing trend is the use of vision and AI to protect people and improve collaboration with robots and machines. Key applications include:

- Collaborative robots: automatic speed reduction or stopping when a person enters the work area, using human presence detection and pose estimation [10].

- PPE compliance: real-time verification of helmets, vests, gloves, or safety glasses.

- Ergonomic analysis: identification of risky postures and repetitive tasks, with suggestions to prevent injuries.
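The collaborative robot case can be sketched as a speed factor that depends on how close the nearest detected person is. The two distances below are placeholder values for illustration only. They are not taken from any safety standard, and a real cell must be dimensioned through a proper risk assessment.

```python
STOP_DISTANCE_M = 0.5  # hypothetical: stop completely at or below this distance
SLOW_DISTANCE_M = 1.5  # hypothetical: run at full speed at or above this distance

def speed_factor(distance_m):
    """Return a speed multiplier between 0.0 (stopped) and 1.0 (full speed)."""
    if distance_m <= STOP_DISTANCE_M:
        return 0.0
    if distance_m >= SLOW_DISTANCE_M:
        return 1.0
    # Scale linearly between the two distances.
    return (distance_m - STOP_DISTANCE_M) / (SLOW_DISTANCE_M - STOP_DISTANCE_M)

# Distances as a person-detection model might report them, in meters.
for distance in (2.0, 1.0, 0.3):
    print(f"person at {distance} m -> speed factor {speed_factor(distance)}")
```

The vision system's job is to supply the distance reliably and quickly. The rule that turns it into a speed command can stay this simple.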

As a result, machine vision is no longer focused only on the product. It is increasingly centered on safety and the well-being of people.

Computer Vision has evolved from simple inspection to interpretation and anticipation, transforming industrial production. What began as an effort to detect defects has become an ecosystem of technologies that speed up system deployment, enable decisions within milliseconds, and capture the geometry and internal composition of materials. The integration of AI and machine vision not only improves quality, but also transforms how factories operate, making them safer, more efficient, and more intelligent.

Thank you for joining us on this journey through the technologies that are redefining the industrial sector. We hope this transition from simply “seeing” to “understanding and anticipating” inspires you to transform your processes. The Smart Factory is not a futuristic vision, but a present-day reality, ready to be built.

References:

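[1] Qué es una Smart Factory y en qué consiste la fabricación inteligente | UNIR (in English: "What a Smart Factory is and what smart manufacturing consists of")

[2] Historia de Vision AI: De la detección de bordes a YOLOv8 (in English: "History of Vision AI: From edge detection to YOLOv8")

[3] La inteligencia artificial generativa y su impacto en la creación de contenidos mediáticos (in English: "Generative artificial intelligence and its impact on media content creation")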

[4] https://arxiv.org/pdf/2501.02189

[5] https://arxiv.org/pdf/2407.04053

[7] https://www.haip-solutions.com/hyperspectral-cameras/blackindustry-swir/

[8] https://www.ultralytics.com/es/glossary/vision-transformer-vit

[9] https://es.mathworks.com/campaigns/offers/next/digital-twins-for-predictive-maintenance.html

[10] https://www.tekniker.es/es/la-seguridad-en-entornos-colaborativos-persona-robot